A while back, I did some shallow reverse engineering of Copilot.

Now I've done a deeper dive into Copilot's internals, built a tool to explore its code, and written a blog post that answers specific questions and points out some tidbits.

https://thakkarparth007.github.io/copilot-explorer/posts/copilot-internals

Do read; it might be fun! https://twitter.com/parth007_96/status/1546762772708413440

In particular, I try to answer (a) what goes into Copilot's prompt, (b) how it invokes the model, (c) how Copilot's success rate is measured, and (d) whether Copilot includes code snippets in its telemetry (yes, it does, but there's more).

30 seconds after every accept or reject of a suggestion, Copilot "captures" a snapshot (a hypothetical prompt and completion) around the insertion point, likely for use as training data.
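A minimal sketch of what such a snapshot could look like, assuming the capture simply splits the current document text around the original insertion offset when the 30-second timer fires. The names `Snapshot` and `capture_snapshot` and the window sizes `PROMPT_CHARS` and `COMPLETION_CHARS` are hypothetical illustrations, not Copilot's actual code or values.

```python
from dataclasses import dataclass

# Hypothetical window sizes; the real values are not stated in this thread.
PROMPT_CHARS = 500
COMPLETION_CHARS = 200


@dataclass
class Snapshot:
    prompt: str      # text just before the insertion point
    completion: str  # text just after it (what the user ended up with)


def capture_snapshot(document: str, offset: int,
                     prompt_chars: int = PROMPT_CHARS,
                     completion_chars: int = COMPLETION_CHARS) -> Snapshot:
    """Split the document around the insertion offset.

    Assumed to be called by a timer roughly 30 s after the user accepts or
    rejects a suggestion, so `document` reflects their subsequent edits.
    """
    # Clamp the offset in case the document shrank in the meantime.
    offset = max(0, min(offset, len(document)))
    start = max(0, offset - prompt_chars)
    return Snapshot(prompt=document[start:offset],
                    completion=document[offset:offset + completion_chars])
```

For example, `capture_snapshot("def add(a, b):\n    return a + b\n", 15)` treats the function signature as the prompt and the body the user kept as the completion, which is why such pairs would make plausible training data.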

I also found that Copilot queries "cushman-ml", which strongly suggests that a 12B-parameter model sits in the backend.

I might be making some incorrect guesses, but I provide code pointers to almost everything I talk about. So if you find holes, do let me know and I'll update the post!

Explore the reverse engineered codebase here: https://thakkarparth007.github.io/copilot-explorer/codeviz/templates/code-viz.html#mmain&pos=1:1

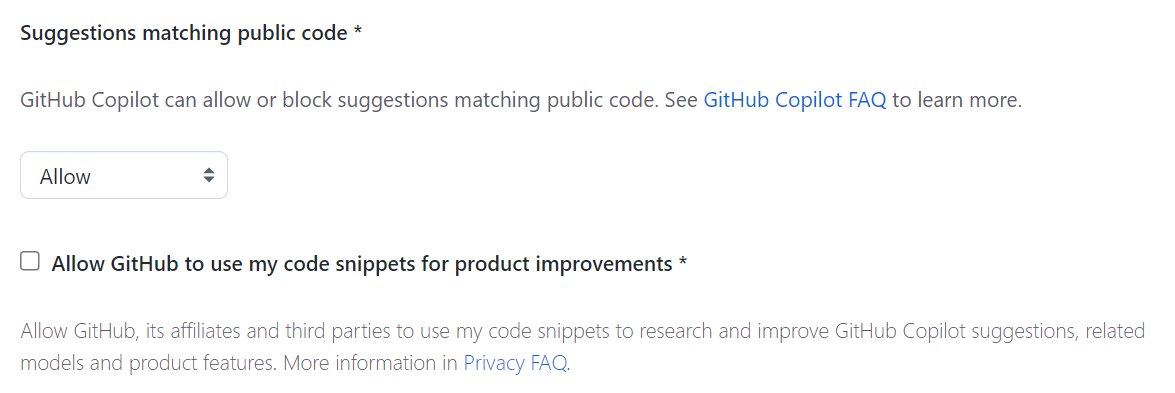

I should clarify that GitHub does let you control whether your code snippets are used for training. I couldn't determine whether the client itself scrubs the snippets from telemetry based on your preferences, or whether they are logged in telemetry but simply not used for training.

Okay, I inspected further: the scrubbing happens in the client itself. If you've opted out of the above option, the snippets don't leave your machine. I'll update the post to clarify this.

I looked at the snippet-collection logic again and confirmed that if you have opted out of "your snippets being used for product improvements" (https://github.com/settings/copilot), the snippets do not leave your machine. I've updated the post to reflect this finding.
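The client-side gating can be sketched roughly like this, assuming the opt-out setting is available to the client as a boolean. The function name `build_telemetry_event` and the event fields are hypothetical; the only behavior taken from the thread is that snippet text is withheld on the client for users who have opted out.

```python
from typing import Any, Dict


def build_telemetry_event(prompt: str, completion: str,
                          snippets_opted_in: bool) -> Dict[str, Any]:
    # Hypothetical event skeleton; real field names are not given in the thread.
    event: Dict[str, Any] = {"event": "snapshot"}
    # Scrubbing happens here, on the client: the raw code is attached only
    # when the user allows snippet collection, so for opted-out users the
    # snippet text never leaves the machine.
    if snippets_opted_in:
        event["prompt"] = prompt
        event["completion"] = completion
    return event
```

Doing this check before anything is sent, rather than filtering on the server, is what guarantees the opted-out snippets are never transmitted at all.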
